Mainframe security is the discipline of protecting data, identities, and workloads on IBM Z systems running z/OS.

It encompasses access control via External Security Managers (ESMs) such as RACF, ACF2, and Top Secret, data protection through encryption and tokenization, activity monitoring for threat detection, and compliance management for frameworks such as PCI DSS 4.0, DORA, and HIPAA.

In 2026, mainframe security is shifting from perimeter-centric controls to data-centric protection, i.e., securing sensitive information at the field level so that even if access controls fail, the data itself is worthless to an attacker.

This guide covers the core controls, modern protection approaches, compliance requirements, and the evolving threat landscape that security leaders need to navigate.

For decades, mainframes were considered inherently secure, a "fortress" of enterprise IT. That reputation was earned. IBM Z's architecture delivers tightly controlled access, centralized data management, and hardware-level integrity that no distributed system can match.

But in 2026, the fortress myth is the threat. Over 53% of organizations now express concern about mainframe security, according to the 2025 Arcati Mainframe User Survey. That concern is not because the platform has weakened; it is because the attack surface around it has expanded.

Mainframe security is not general cybersecurity applied to a big computer. It operates within z/OS-specific constructs: the System Authorization Facility (SAF) routes security calls to an External Security Manager (ESM), which enforces access policies across datasets, transactions, and system resources.

Activity generates System Management Facilities (SMF) records that feed monitoring and audit. Cryptographic operations run through the Integrated Cryptographic Service Facility (ICSF) and dedicated Crypto Express hardware.

These are not interchangeable with cloud IAM roles, endpoint agents, or SIEM connectors; rather, they’re platform-native, and any mainframe security strategy must work within them – or around them – without disruption.

The modern imperative adds a layer. Mainframes now connect to cloud analytics platforms, API gateways, SaaS applications, and distributed databases.

The 2024 Mainframe Market Pulse study found that heavily regulated sectors report 4.7 times more vulnerabilities when external technologies are integrated with the mainframe.

Protecting the system is no longer enough. You need to protect the data itself, regardless of where it flows.

Mainframe security is layered. Each control addresses a distinct attack surface, and no single layer is sufficient on its own. The table below maps the seven primary control layers to their function, tooling, and protection scope.

Access control through ESMs is the foundation. RACF, ACF2, and Top Secret each provide authentication, authorization, and auditing, but they primarily answer one question: who can access what.

They do not, by themselves, solve data exposure problems. Sensitive fields copied into downstream datasets, extracted into distributed analytics, or shared across non-production environments remain unprotected by ESMs alone.
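To make "who can access what" concrete, the sketch below models a single discrete resource profile in the spirit of an ESM: a default access level (UACC) plus per-user entries, checked against the NONE-through-ALTER scale that RACF uses. It is a deliberately simplified illustration, not RACF's actual algorithm: real ESMs also evaluate group connections, generic profiles, warning mode, and more. The names `Profile`, `check_access`, `PROD.CUST.MASTER`, and `BATCH01` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import IntEnum


class Access(IntEnum):
    """Access levels in ascending order, modeled on RACF's NONE through ALTER scale."""
    NONE = 0
    EXECUTE = 1
    READ = 2
    UPDATE = 3
    CONTROL = 4
    ALTER = 5


@dataclass
class Profile:
    """Simplified discrete resource profile: a default (UACC) plus per-user entries."""
    name: str
    uacc: Access = Access.NONE
    access_list: dict[str, Access] = field(default_factory=dict)


def check_access(profile: Profile, user: str, requested: Access) -> bool:
    """Grant only if the user's entry, or the UACC when the user has none, meets the request."""
    granted = profile.access_list.get(user, profile.uacc)
    return granted >= requested


# Hypothetical production dataset profile: only the batch ID may read it.
cust_master = Profile("PROD.CUST.MASTER", Access.NONE, {"BATCH01": Access.READ})
print(check_access(cust_master, "BATCH01", Access.READ))    # True
print(check_access(cust_master, "BATCH01", Access.UPDATE))  # False
print(check_access(cust_master, "ANALYST", Access.READ))    # False
```

Note what the model does not cover: once `BATCH01` has legitimately read the dataset and written an extract elsewhere, nothing in this decision follows the copy. That gap is what the data-layer controls are meant to close.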

Data encryption adds a second layer. IBM's Pervasive Encryption uses hardware-accelerated cryptography via Crypto Express cards and CPACF to encrypt datasets, coupling facility structures, and network connections with minimal performance impact.

But encryption is reversible: anyone with access to the key can decrypt. Tokenization and dynamic data masking operate differently.

Tokenization replaces sensitive values with non-sensitive substitutes (tokens), while the original values are held in an isolated vault. There is no mathematical relationship between the token and the original value. Dynamic masking obfuscates data in real time, based on user roles, during active sessions.
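A minimal sketch of vaulted tokenization, assuming an in-memory dictionary stands in for the isolated vault (a real vault would be a hardened, access-controlled service). The token is drawn from a cryptographically secure random source, so it carries no information about the original; only a caller with access to the vault can map it back. `TokenVault` and the `tok_` prefix are hypothetical.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to original values."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same input always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        while True:
            # Random, not derived from the value: nothing to reverse-engineer.
            token = "tok_" + secrets.token_hex(8)
            if token not in self._token_to_value:
                break
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Recovery is a lookup, possible only with access to the vault.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("123-45-6789")
print(vault.detokenize(token))                    # 123-45-6789
print(token == vault.tokenize("123-45-6789"))     # True
```

Contrast this with encryption: there, the original can be recomputed from the ciphertext by anyone holding the key, whereas here an attacker who steals tokens without the vault has nothing to compute with.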

Historically, these controls operated independently. Modern Data Security Platforms (DSPs) are emerging to unify discovery, classification, and protection across all layers – including legacy mainframe environments – under a single policy engine.
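Discovery and classification come before protection: you cannot tokenize a field you have not found. The sketch below shows the simplest form of the idea, assuming the data has already been decoded from EBCDIC to text: pattern matching for candidate card numbers and SSNs, with a Luhn check to discard digit runs that cannot be valid card numbers. The function names are hypothetical, and production classifiers use far more context (column names, record layouts, proximity rules) than two regular expressions.

```python
from __future__ import annotations

import re

PAN_CANDIDATE = re.compile(r"\b\d{13,19}\b")   # 13-19 digit runs
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn (mod 10) check."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> dict[str, list[str]]:
    """Return Luhn-valid PAN candidates and SSN-formatted values found in text."""
    return {
        "PAN": [m for m in PAN_CANDIDATE.findall(text) if luhn_valid(m)],
        "SSN": SSN_PATTERN.findall(text),
    }


sample = "ACCT 4111111111111111 REF 4111111111111112 SSN 123-45-6789"
print(classify(sample))
# {'PAN': ['4111111111111111'], 'SSN': ['123-45-6789']}
```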

For a detailed breakdown of z/OS security systems, see our mainframe security controls guide. To compare specific tools, see the mainframe security tools comparison.

Choosing between agent-based and agentless protection is the decision that defines your mainframe security architecture. Both approaches are valid, but they solve different problems and carry different operational risks.

Agent-based tools provide deep visibility into z/OS internals.

They can audit RACF configurations, monitor system calls, and detect unauthorized changes at the operating system level. For compliance use cases that require proof of access control enforcement, they are essential.

The tradeoff is operational risk. Installing any new software on a production mainframe triggers what is effectively a three-way standoff. Mainframe operations teams prioritize stability and 99.999% uptime. Security teams push for immediate risk reduction.

Application owners need feature velocity and resist anything that could break legacy workflows. The rightly protective stance of mainframe teams means any proposed change faces a long cycle of testing and approval.

Agentless solutions bypass this friction entirely.

The mainframe is never touched. No change management tickets, no regression testing against decades-old COBOL applications, no approval delays. Protection is applied in the network path as data flows to and from the mainframe.
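Conceptually, in-path protection means rewriting sensitive fields in records as they pass through, while keeping every field at its original width so legacy consumers still parse the record. The sketch assumes a hypothetical fixed-width layout (`LAYOUT`) and takes the actual protection step as a `protect` callable, standing in for whatever tokenization or masking service a gateway would call. A real in-path deployment also has to parse the actual wire protocol (for example, the TN3270 data stream or a database protocol), which this sketch does not attempt.

```python
from __future__ import annotations

from typing import Callable, Iterable, Iterator

# Hypothetical fixed-width record layout: (field name, offset, length).
LAYOUT = [("CUST_ID", 0, 8), ("NAME", 8, 20), ("PAN", 28, 16)]
PROTECTED_FIELDS = {"PAN"}


def protect_stream(records: Iterable[str], protect: Callable[[str], str]) -> Iterator[str]:
    """Rewrite protected fields in each record in transit, keeping every field width intact."""
    for record in records:
        out = record
        for name, start, length in LAYOUT:
            if name not in PROTECTED_FIELDS:
                continue
            replacement = protect(record[start:start + length])
            if len(replacement) != length:
                raise ValueError(f"{name}: protection must preserve the {length}-character width")
            out = out[:start] + replacement + out[start + length:]
        yield out


record = "C0000001" + "JANE DOE".ljust(20) + "4111111111111111"
masked = next(protect_stream([record], lambda pan: "0" * 12 + pan[-4:]))
print(masked[28:])  # 0000000000001111
```

The generator processes one record at a time, which mirrors the streaming nature of in-path protection: nothing is staged on, or installed on, the mainframe itself.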

In a production deployment, a national telecommunications company used this approach to secure cleartext customer data in IBM DB2 databases and protect live TN3270 terminal sessions – without installing a single line of code on z/OS or impacting mainframe performance. See the full Securing Legacy Mainframe Data case study.

The cost dynamics differ significantly as well. Agent-based tools carry per-MIPS licensing, testing overhead, and ongoing maintenance burden.

Most mature mainframe environments will use both approaches: agent-based tools for access control auditing and vulnerability scanning, and agentless tools for data protection, tokenization, masking, and compliance scope reduction. The key is understanding which problems each approach actually solves.

Protecting mainframe data requires a layered strategy that addresses data at rest, in transit, and in use. The three core techniques (encryption, tokenization, and data discovery) serve different purposes and are not interchangeable.

IBM's Pervasive Encryption provides hardware-accelerated cryptographic protection for datasets at rest and network connections in transit.

It leverages Crypto Express cards and the Central Processor Assist for Cryptographic Function (CPACF) to minimize performance overhead. AT-TLS (Application Transparent Transport Layer Security) handles encryption for network traffic without requiring application changes.

IBM Z also integrates quantum-safe algorithms directly into its cryptographic processors, offering early protection against harvest-now-decrypt-later attacks.

However, adoption remains low. The 2025 Arcati Mainframe User Survey reports that only 7% of organizations have implemented quantum-safe cryptography.

For organizations not yet on the latest IBM Z hardware, data-layer protection through tokenization provides an alternative path to quantum resilience.

Tokenized values have no mathematical relationship to the original data and cannot be reverse-engineered regardless of computing power.

Encryption transforms data mathematically and is reversible with the correct key. Tokenization replaces sensitive values with substitutes that have no mathematical relationship to them; the originals are held in a separate vault.

Both are used in mainframe environments, but tokenization uniquely reduces PCI DSS audit scope, because the original sensitive data no longer exists in the systems that hold only tokens.

For a deeper dive into encryption approaches, see the mainframe encryption guide.

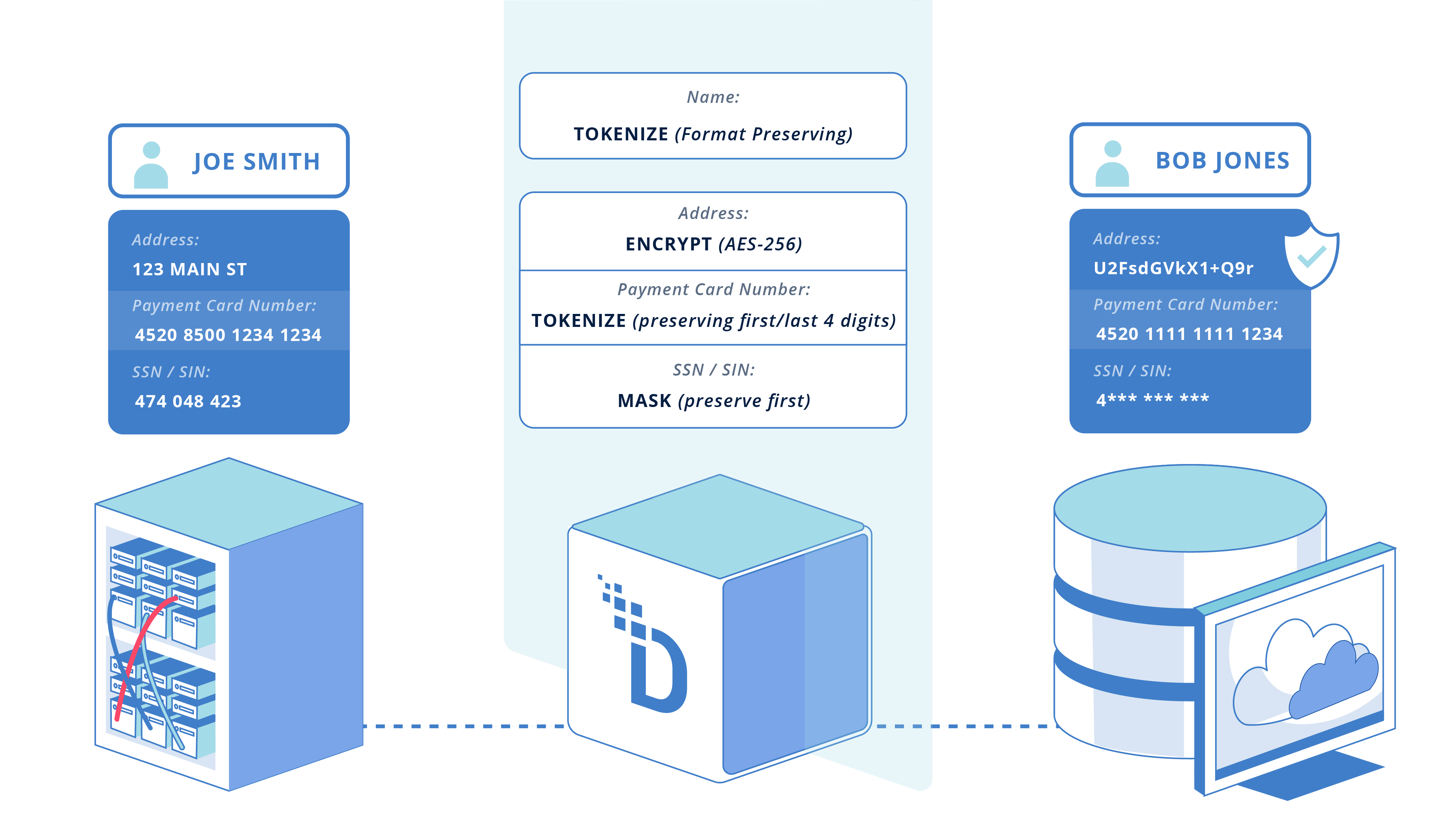

Tokenization replaces sensitive data (e.g., credit card numbers, Social Security numbers, patient identifiers) with format-preserving tokens that maintain the original data's structure.

This is critical for mainframe environments where COBOL applications enforce rigid field widths and validation rules.

Where the application validates check digits, a tokenized credit card number must still pass the Luhn algorithm check. A tokenized SSN must keep its 9-digit format.

Without format preservation, legacy applications reject the protected data and business processes break. Vaulted, format-preserving tokenization solves this by generating tokens that satisfy application logic while removing all sensitive information from the system.
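Here is a minimal sketch of vaulted, format-preserving tokenization for card numbers, assuming the consuming application checks length, digits-only content, and the Luhn check digit. The token body is random; only the final digit is computed, so that the token passes the same validation as a real PAN. `FormatPreservingVault` is hypothetical, and the in-memory dictionaries stand in for a real vault. Some deployments instead deliberately issue tokens that fail the Luhn check so they can never be mistaken for real card numbers; which choice fits depends on what the application validates.

```python
from __future__ import annotations

import secrets


def luhn_check_digit(payload: str) -> str:
    """Return the Luhn check digit for a digit string that does not yet have one."""
    total = 0
    for i, ch in enumerate(reversed(payload)):
        d = int(ch)
        if i % 2 == 0:  # these positions are doubled once the check digit is appended
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return str((10 - total % 10) % 10)


class FormatPreservingVault:
    """Hypothetical vault issuing random, same-length, Luhn-valid tokens for PANs."""

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}
        self._pan_to_token: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        if not (pan.isdigit() and 13 <= len(pan) <= 19):
            raise ValueError("expected a 13-19 digit PAN")
        if pan in self._pan_to_token:
            return self._pan_to_token[pan]
        while True:
            body = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 1))
            token = body + luhn_check_digit(body)
            # Never hand back the real PAN or a token already issued.
            if token != pan and token not in self._token_to_pan:
                break
        self._token_to_pan[token] = pan
        self._pan_to_token[pan] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_pan[token]


vault = FormatPreservingVault()
token = vault.tokenize("4111111111111111")
print(len(token), token[-1] == luhn_check_digit(token[:-1]))  # 16 True
print(vault.detokenize(token))                                # 4111111111111111
```

The same pattern applies to SSNs: generate random digits in the 9-digit `NNN-NN-NNNN` shape and record the mapping in the vault.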

Dynamic Data Masking (DDM) protects data in real time during active sessions. An authorized call center agent might see a complete customer record, while a junior analyst sees a masked version (e.g., XXX-XX-1234).

DDM integrates with Identity and Access Management (IAM) systems, such as Active Directory, to enforce role-based masking policies.
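A minimal sketch of role-based dynamic masking, reproducing the call-center and junior-analyst example above. The role names and the `ROLE_POLICIES` mapping are hypothetical; in practice the role would be resolved from the IAM directory at session time. Note the default: a role with no policy sees a fully masked value rather than the cleartext.

```python
# Hypothetical role-to-policy mapping; real roles would come from the IAM directory.
ROLE_POLICIES = {
    "call_center_agent": "full",
    "junior_analyst": "last4",
}


def mask_ssn(ssn: str, role: str) -> str:
    """Return the SSN as the given role may see it; unknown roles see nothing."""
    policy = ROLE_POLICIES.get(role, "none")
    if policy == "full":
        return ssn
    if policy == "last4":
        return "XXX-XX-" + ssn[-4:]
    return "XXX-XX-XXXX"


for role in ("call_center_agent", "junior_analyst", "contractor"):
    print(role, mask_ssn("123-45-6789", role))
# call_center_agent 123-45-6789
# junior_analyst XXX-XX-6789
# contractor XXX-XX-XXXX
```

Unlike tokenization, masking changes only what is displayed: the stored value is untouched, so the same record can be shown differently to different users in the same moment.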

In a production deployment, a telecom enterprise used vaulted, format-preserving tokenization to secure sensitive customer data in IBM DB2 databases.

DDM simultaneously protected data during live TN3270 terminal sessions, enforcing role-based access – all without a single code change to legacy COBOL applications. See our case study on securing legacy mainframe data for the full story.

Non-production environments – i.e., development, testing, User Acceptance Testing (UAT), and analytics sandboxes – are frequent targets for breaches.

They typically operate with weaker access controls and less monitoring than production systems. When production mainframe data is copied into these environments for testing, the sensitive information travels with it.

This is a significant and underestimated risk. Data breaches involving multiple environments cost an average of $5.05 million, compared to $4.01 million for breaches confined to on-premises production systems.

Modern test data management addresses this by protecting data at the point of extraction. The process reads from production, applies in-flight tokenization and masking, and writes only de-identified data to downstream environments.

Referential integrity is preserved across tables and keys, ensuring applications behave realistically without exposing real PII.
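The extract-protect-load flow can be sketched as follows, assuming two hypothetical tables (`customers` and `orders`) that share a `cust_id` key. A single tokenizer is used for the whole extraction run, so every occurrence of a given production ID maps to the same same-width token in both tables, which is what keeps joins working downstream. `ConsistentTokenizer` and `extract` are illustrative names, and the run-scoped dictionary stands in for a persistent vault that would keep tokens stable across test-data refreshes.

```python
from __future__ import annotations

import secrets


class ConsistentTokenizer:
    """Maps each distinct value to a unique, random, same-width numeric token for one run."""

    def __init__(self) -> None:
        self._map: dict[str, str] = {}
        self._used: set[str] = set()

    def __call__(self, value: str) -> str:
        if value not in self._map:
            while True:
                token = "".join(secrets.choice("0123456789") for _ in range(len(value)))
                # Unique tokens keep distinct customers distinct after extraction.
                if token != value and token not in self._used:
                    break
            self._map[value] = token
            self._used.add(token)
        return self._map[value]


def extract(customers: list[dict], orders: list[dict]) -> tuple[list[dict], list[dict]]:
    """Copy production rows into de-identified test rows, tokenizing the shared key consistently."""
    tok = ConsistentTokenizer()
    test_customers = [{**c, "cust_id": tok(c["cust_id"]), "name": "TEST CUSTOMER"} for c in customers]
    test_orders = [{**o, "cust_id": tok(o["cust_id"])} for o in orders]
    return test_customers, test_orders


customers = [{"cust_id": "00001234", "name": "Jane Doe"}]
orders = [{"order_id": 1, "cust_id": "00001234"}, {"order_id": 2, "cust_id": "00001234"}]
test_customers, test_orders = extract(customers, orders)
print(test_orders[0]["cust_id"] == test_customers[0]["cust_id"] == test_orders[1]["cust_id"])  # True
print(test_customers[0]["name"])  # TEST CUSTOMER
```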

A global insurer deployed this approach to protect sensitive data across its non-production environments, eliminating the compliance gap between production and dev/test without disrupting developer workflows.

Mainframes remain the processing backbone for industries that handle the highest volumes of sensitive data. Each sector faces distinct security and compliance pressures.

Core banking systems on z/OS process billions of transactions daily. Well over half of Fortune 500 financial institutions rely on mainframes for mission-critical workloads.

PCI DSS 4.0 requires stored primary account numbers (PANs) to be rendered unreadable, for example through tokenization or strong cryptography (Requirement 3.5.1), and the Digital Operational Resilience Act (DORA) mandates ICT risk management and incident reporting for EU financial services firms. For these institutions, mainframe security is an audit requirement.

Policy administration, claims processing, and actuarial systems frequently run on mainframe databases that still hold cleartext PII.

Insurance companies face dual pressure: protecting production data for regulatory compliance and securing the non-production copies used by actuarial teams, QA testers, and offshore developers. A global insurer used agentless tokenization to protect sensitive data in non-production environments while maintaining full testing functionality.

Another insurer enforced data residency compliance by tokenizing customer data before it crossed borders to a US-hosted Salesforce deployment.

Patient records in legacy mainframe systems are subject to HIPAA requirements, including PHI encryption, access controls, audit trails, and multi-factor authentication (MFA).

Cross-system data sharing between mainframes and Electronic Health Record (EHR) platforms introduces additional exposure risk.

Benefits processing, tax systems, and defence applications run on mainframes across federal and state agencies.

Emerging US federal requirements are tightening mainframe obligations. Executive Order 14117 restricts bulk transfers of sensitive US data to foreign adversaries, requiring data classification and export controls.

The Federal Information Security Modernization Act (FISMA) mandates continuous cybersecurity planning, and the Criminal Justice Information Services (CJIS) standards require encryption, access auditing, and MFA for criminal justice data.

Billing, subscriber management, and network provisioning systems run on mainframes processing millions of daily transactions.

Datasets behind legacy COBOL applications often hold primary account numbers (PANs) and PII in cleartext. A national telecom secured its entire mainframe data estate – DB2 databases and live terminal sessions – using agentless tokenization, without modifying a single legacy application.

In energy and utilities, land management data, SCADA integration points, and customer billing systems on mainframes require protection under both industry-specific regulations and general data privacy frameworks. See how enterprises approach mainframe modernization in these environments.

The threat landscape for mainframe environments has changed materially in the past two years.

Five risks deserve attention.

Security improvement is now the #2 priority for mainframe teams, trailing only talent and training, according to the 2025 Arcati survey.

Yet 77% of organizations rely on internal upskilling rather than hiring – a reflection of both a talent shortage and the reality that mainframe security requires deep contextual expertise that cannot be quickly acquired.

49% of organizations are seeking mainframe security skills, and the pool of specialists continues to shrink as senior mainframe professionals retire.

Heavily regulated sectors report 4.7 times more vulnerabilities when external technologies are integrated with the mainframe.

Cloud connectors, APIs, and cross-platform integrations create new attack surfaces that bypass traditional access controls.

Every API call to a mainframe back-end is a data flow that needs protection. Every replication job to a cloud data lake is a potential exposure point.

The traditional model of isolating the mainframe is no longer viable when the business requires its data to flow continuously to modern platforms.

Ransomware ranks as the #3 risk area for mainframe organizations in the 2025 Arcati survey. IBM's Cost of a Data Breach report documents an average of 204 days to identify a breach and a further 73 days to contain it.

Malicious actors can establish backdoors, compromise backups, and exfiltrate data during that detection gap. For mainframe data, the preemptive defence is not just encryption; it is ensuring data is tokenized so that even exfiltrated information is worthless without access to the vault.

AI-powered reconnaissance enables attackers to probe z/OS configurations at scale. But AI is also emerging as a defence tool for anomaly detection, predictive maintenance, and automated incident response.

Harvest-now-decrypt-later attacks represent a long-term threat to encrypted mainframe data. Only 7% of organizations have implemented quantum-safe cryptography.

IBM Z's latest hardware supports quantum-safe algorithms, but organizations running older hardware need alternative approaches.

Tokenization provides quantum resilience by design: tokens have no mathematical relationship to the original data, so no amount of computing power can derive the original value from a token.

For strategies to protect data during mainframe-to-cloud migrations, see our complete guide.

Compliance is the primary budget driver for mainframe security investment. The table below maps nine active regulatory frameworks to their mainframe relevance and key requirements.

Data residency is an increasingly important compliance consideration for mainframe environments.

When mainframe data is replicated to cloud platforms hosted in other jurisdictions, organizations must ensure sensitive data is neutralized before crossing borders. This applies to GDPR for EU data, PIPEDA for Canadian data, and emerging frameworks across APAC.

A Canadian enterprise deployed tokenization to enable Salesforce Marketing Cloud adoption without violating data residency requirements.

Customer PII was replaced with format-preserving tokens before crossing the border, preserving full platform functionality while ensuring no real data left the jurisdiction.
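The residency pattern can be sketched as a gate that runs before any record leaves the home jurisdiction. The assumptions are loud here: the `PII_FIELDS` classification, the `"CA"` home jurisdiction, and `InCountryVault` are all hypothetical, and the vault (and therefore the ability to detokenize) is assumed to stay in-country. For brevity the sketch issues opaque tokens; the deployment described above used format-preserving tokens, so that fields such as email addresses would still pass the SaaS platform's validation.

```python
from __future__ import annotations

import secrets

PII_FIELDS = {"name", "email", "phone"}  # hypothetical output of data classification
HOME_JURISDICTION = "CA"


class InCountryVault:
    """Hypothetical vault that never leaves the home jurisdiction."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._store[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._store[token]


def prepare_outbound(record: dict, destination: str, vault: InCountryVault) -> dict:
    """Return the record to send; PII fields are tokenized if the destination is out of jurisdiction."""
    if destination == HOME_JURISDICTION:
        return dict(record)
    return {k: (vault.tokenize(v) if k in PII_FIELDS else v) for k, v in record.items()}


record = {"cust_no": "C1", "name": "Jane Doe", "email": "jane@example.com", "segment": "gold"}
vault = InCountryVault()
outbound = prepare_outbound(record, "US", vault)
print(outbound["segment"], outbound["name"].startswith("tok_"))  # gold True
print(vault.detokenize(outbound["name"]))                        # Jane Doe
```

Non-sensitive fields such as the segment pass through unchanged, which is what preserves platform functionality; the real identities can be restored only inside the jurisdiction where the vault lives.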

The critical compliance strategy across all frameworks is scope reduction.

Tokenization removes sensitive data from in-scope systems entirely, reducing audit complexity, shortening assessment cycles, and concentrating compliance effort on the vault rather than every system that touches the data.

DataStealth provides agentless data protection for mainframe environments.

The platform operates in the network traffic flow to discover, classify, and protect sensitive data, all without installing agents on z/OS, without modifying legacy COBOL applications, and without impacting mainframe performance.

Implemented in production environments across banking, insurance, telecommunications, and government, DataStealth is a proven data security platform.

Organizations across regulated industries have deployed DataStealth to reduce PCI DSS scope, enforce data residency, and secure mainframe-to-cloud data pipelines.

Capabilities include data discovery and classification, format-preserving tokenization, dynamic data masking, encryption, cross-border data residency enforcement, and unified protection across mainframe, cloud, and SaaS environments.

Mainframe security is the discipline of protecting data, identities, and workloads on IBM Z systems running z/OS.

It encompasses access control via External Security Managers (RACF, ACF2, Top Secret), data protection via encryption and tokenization, activity monitoring for threat detection, and compliance management for frameworks such as PCI DSS 4.0 and DORA.

The modern priority is data-centric, i.e., securing the data itself so it remains protected regardless of where it flows.

Mainframes process over 90% of credit card transactions, 68% of global production IT workloads, and hold core banking data for the majority of Fortune 500 financial institutions.

Their concentration of high-value data makes them a prime target for attackers, while their integration with cloud and API ecosystems creates new attack surfaces that did not exist when these systems were first deployed.

RACF, ACF2, and Top Secret are all External Security Managers (ESMs) for z/OS. RACF is IBM's own ESM, delivered as a component of the z/OS Security Server rather than as a separate product. ACF2 and Top Secret are third-party alternatives from Broadcom, each offering different rule structures and management interfaces.

RACF uses a resource-centric model, ACF2 uses a user-centric model, and Top Secret uses a policy-based model. The choice depends on organizational history and operational preferences.

Mainframe data can be secured without installing agents. Agentless mainframe security solutions operate in the network layer, intercepting data as it flows to and from the mainframe.

This approach protects sensitive data through tokenization, encryption, or masking without installing software on z/OS – eliminating the risk of destabilizing legacy applications or triggering complex change management processes.

Pervasive Encryption is IBM's built-in capability to encrypt data at rest (datasets, coupling facilities) and in flight (network connections) using hardware-accelerated cryptography.

It leverages Crypto Express cards and the Central Processor Assist for Cryptographic Function (CPACF) to minimize performance impact. It protects data at the infrastructure level but does not provide field-level tokenization or dynamic masking capabilities.

Mainframe security operates within z/OS-specific constructs – i.e., SAF calls, ESM profiles, SMF records, and ICSF cryptographic services.

Cloud security uses different identity models (IAM roles, OAuth), encryption approaches (KMS, envelope encryption), and monitoring tools.

They are not interchangeable. Hybrid environments require both, coordinated under a unified data protection strategy that applies consistent policies regardless of where data resides.

PCI DSS 4.0 requires stored PAN to be rendered unreadable wherever it is stored, including on mainframes, for example through tokenization or strong encryption.

DORA mandates ICT risk management for EU financial services. HIPAA requires PHI protection, including audit trails, and SOX demands financial data integrity.

GDPR applies when mainframes process EU personal data. FISMA requires continuous cybersecurity planning for federal systems. Each has mainframe-specific implementation requirements.

Costs vary significantly by approach. IBM RACF is licensed as part of z/OS, through the z/OS Security Server, rather than as a separate product.

Third-party ESMs like ACF2 and Top Secret carry annual licensing fees, typically priced per MIPS.

Agent-based data protection tools add MIPS overhead, testing costs, and change management burden. Agentless solutions avoid the hidden costs of on-mainframe deployment.

One enterprise facing a 4X price increase from its incumbent vendor achieved a 20% cost reduction by switching to an agentless architecture.

Mainframes are not becoming obsolete. The 2025 Arcati Mainframe User Survey shows that 35% of organizations have already invested in mainframe security enhancements, including encryption and zero-trust frameworks.

The real risk is not obsolescence – it is neglect.

Systems that remain outside modernization cycles lose visibility, patch management, and compliance alignment. Forward-thinking organizations treat the mainframe as a living platform that requires continuous security investment.

Protect the data at the source, before it leaves the mainframe.

Tokenization replaces sensitive values with non-sensitive tokens during replication, so downstream cloud systems – e.g., analytics platforms, data lakes, SaaS applications – receive only de-identified data. If the cloud environment is compromised, the stolen data is worthless without access to the tokenization vault.